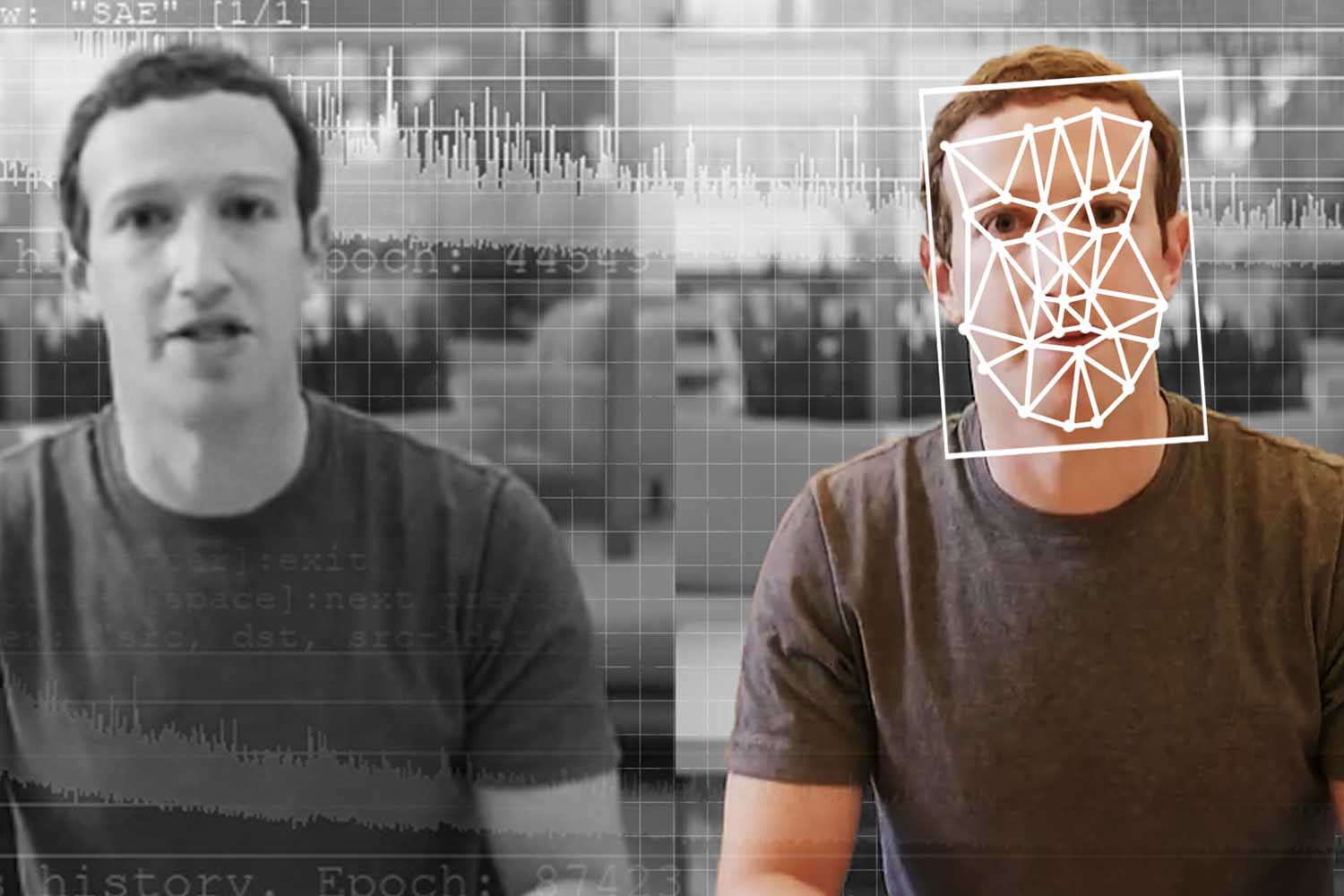

Deepfakes have invaded the job world. This week the FBI announced that they’ve received “complaints reporting the use of deepfakes and stolen Personally Identifiable Information (PII) to apply for a variety of remote work and work-at-home positions.”

The jobs in question are in the fields of information technology and computer programming, along with database and software-related job functions. Some reported positions include “access to customer PII, financial data, corporate IT databases and/or proprietary information.”

Thankfully, deepfake technology, in which an image or recording is manipulated to misrepresent someone as doing or saying something, isn’t quite 100% believable, at least not yet. Employers noticed that the actions and lip movements of the people being interviewed on camera didn’t always match the audio of the person speaking.

The problem is that, outside of obvious disconnects between video and audio, the technology to detect deepfakes isn’t foolproof, either. A report by Sensity (as noted by Insider) suggests that 86% of the time, anti-deepfake technologies accepted deepfake videos as real.

The issue is getting bad enough that even Adobe CEO Shantanu Narayen is weighing in. Adobe is, of course, the tech company behind Photoshop and the Premiere Pro video-editing software, two tools that can be used to create manipulated audio, pictures and video.

“You can argue that the most important thing on the Internet now is authentication of content,” Narayen told Forbes. “When we create the world’s content, [we have to] help with the authenticity of that content, or the provenance of that content.” So far, Narayen’s efforts include the Content Authenticity Initiative, where designers and consumers of content can create and track a digital trail, as well as a “rigorous” ethics review for any new Adobe features and products.

But skeptics doubt that these approaches will work, particularly as the AI behind deepfake tech gets better. As Abhishek Gupta, founder of the Montreal AI Ethics Institute, told Forbes: “Fake content is tailored towards people having fast interactions with very little time spent on judging whether something is authentic or not.”
